Table of Contents

Автоматическое извлечение семантических признаков для предсказания дефектов

Оригинал: Song Wang, Taiyue Liu, and Lin Tan. 2016. Automatically learning semantic features for defect prediction. In Proceedings of the 38th International Conference on Software Engineering (ICSE '16). ACM, New York, NY, USA, 297-308. DOI: https://doi.org/10.1145/2884781.2884804

Введение

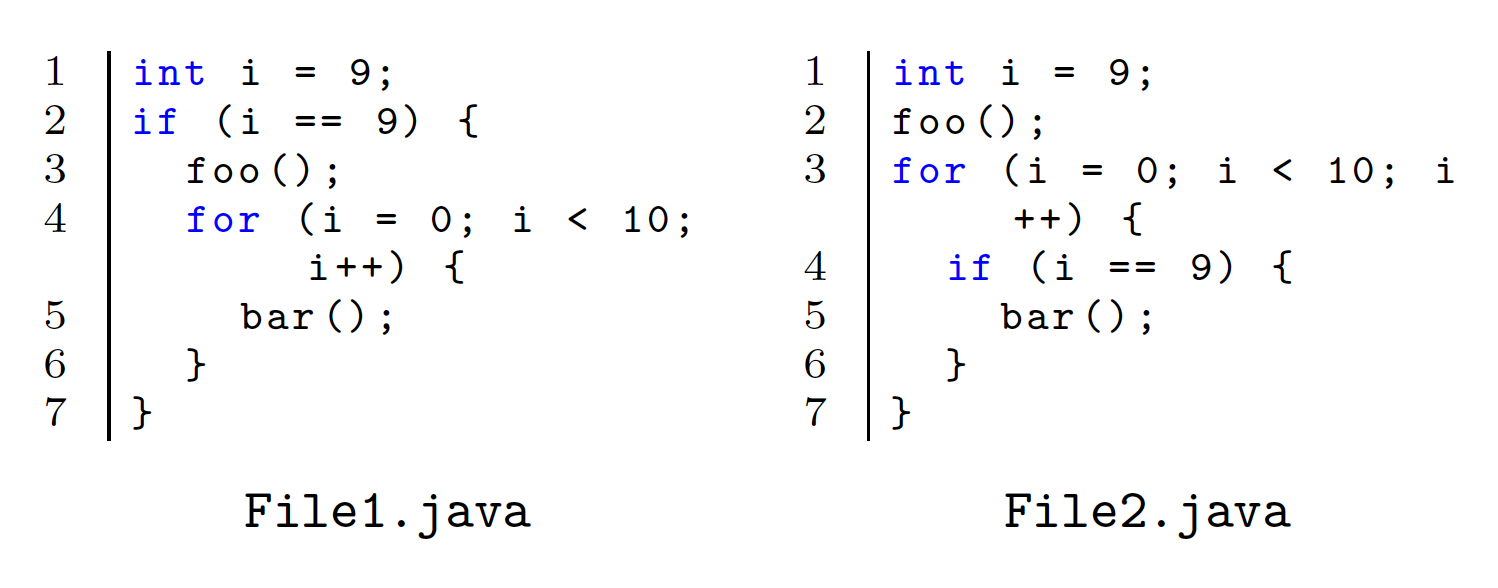

Предсказание дефектных блоков кода в программном обеспечении помогает разработчикам находить баги и приоритизировать тестировщикам свои усилия. Предсказания с помощью использования стандартных фичей для таких предсказаний часто работает неправильно в случае семантических различий в программах, а это необходимо для построения качественных предсказательных моделей. В статье предлагается использовать Deep Belief Network (Глубокая сеть доверия) для конструирования семантических признаков из векторов токенов программы, так как традиционные методы извлечения признаков часто дают одинаковые вектора описаний программ и не способны различить сильно отличающиеся по семантике программы. Вот мотивирующий пример того, как разные коды традиционными методами получат одинаковые признаковые описания:

Основная часть

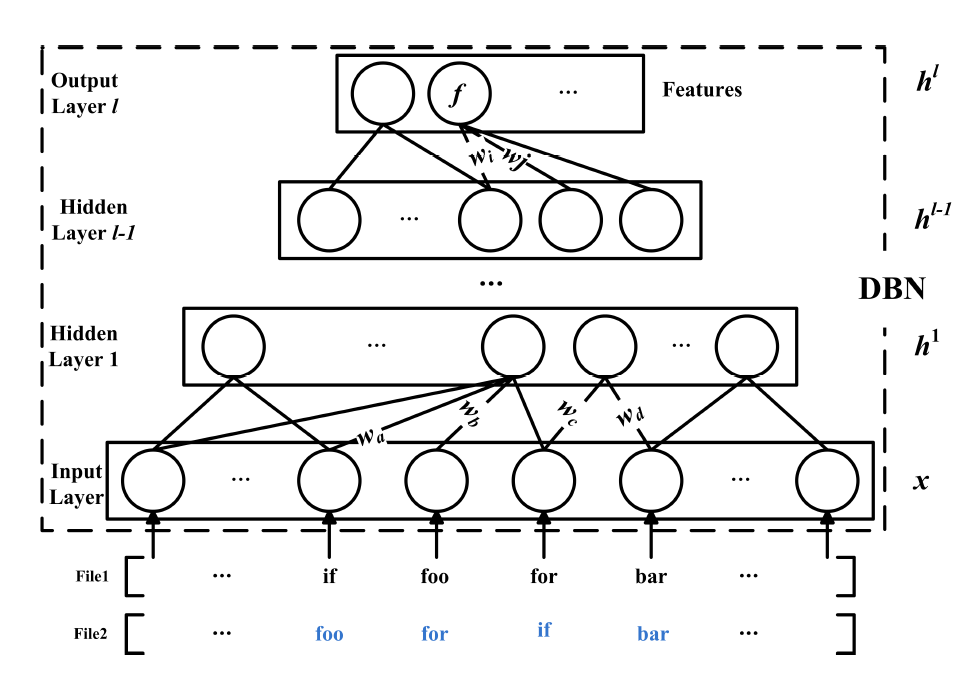

Deep Belief Network - генеративная графическая модель, которая использует многослойную нейронную сеть для изучения представления данных и которая с большой вероятностью может сконструировать семантику и контент входа. DBN состоит из входного, скрытых и верхнего слоя. Для вычисления вероятности P(h_k|h_{k+1}) каждая пара смежных слоёв обучается, как Restricted Boltzmann machine (Ограниченная машина Больцмана).

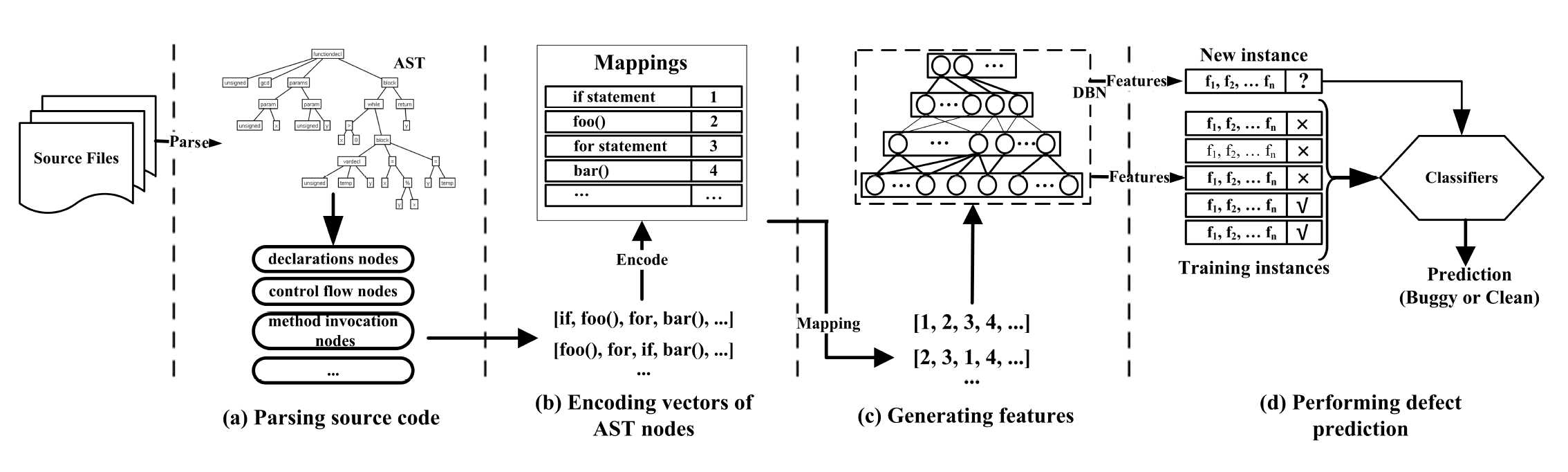

Подход состоит из 4 этапов:

Подход состоит из 4 этапов:

- Парсинг исходного кода в вектор токенов

- Перевод токенов в числовые представления, которые ожидает на вход DBN

- Использование DBN для автоматической генерации семантических признаков

- Построение предсказательных моделей дефектов и предсказание дефектов с использованием выученных семантических признаков из train и test данных.

Синтаксическая информация из кода извлекается с помощью AST (Abstract Syntax Tree). Так как в каждой программе имена сильно привязаны к задаче, то, чтобы не переобучиться на их названия, извлекаются фичи вида: method declarations и method invocations, например getXXX, setXXX, setAttributeXXX, getAttributeXXX.

Важный момент для обучения: шум и неправильная маркировка дефектных данных, что может сильно усложнить задачу качественного обучения модели. Чтобы идентифицировать шум, был предложен эффективный способ обнаружения: Closest List Noise Identification (CLNI). Он анализирует метки k ближайших соседей, и, если вдруг у данного объекта оказалась противоположная метка (в сравнении с k ближайшими соседями), то он приравнивается к шуму. Однако такой подход не может быть напрямую применён к нашей задаче в виду того, что в нём берётся евклидово расстояние (различие между значениями на фиксированных позициях векторов описаний означает лишь различие токенов). Поэтому в качестве функции расстояния берётся расстояние Левенштейна. С помощью этого подхода также корректируются и некорректно размеченные данные.

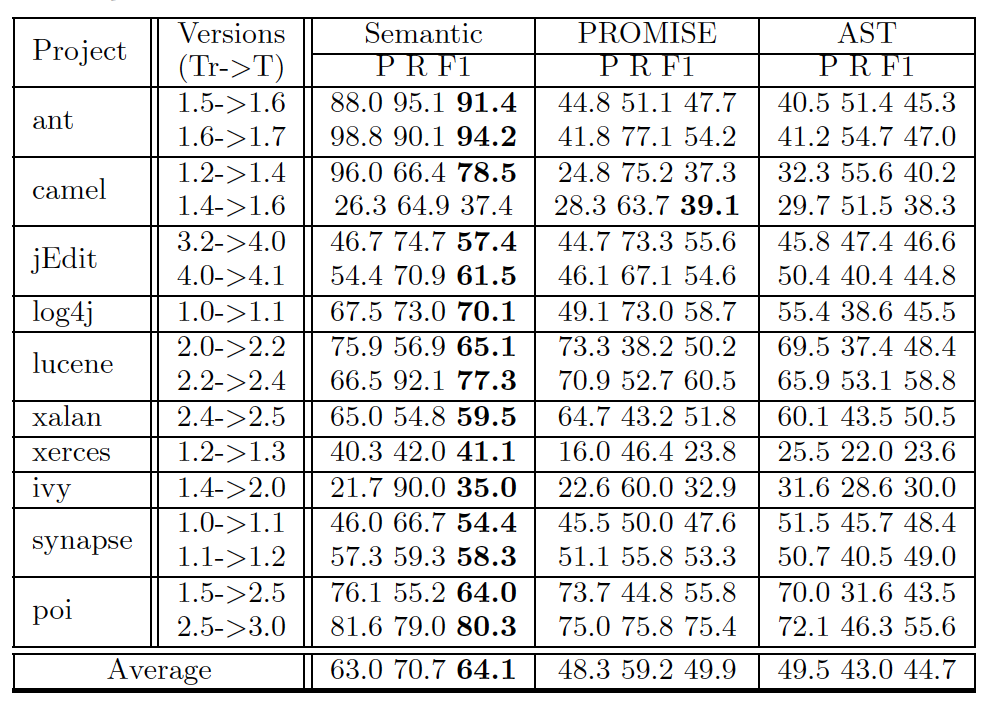

Во время обучения DBN необходимо подтьюнить 3 параметра:

- количество скрытых слоёв

- количество узлов в каждом слое

- количество итераций обучения

Для упрощения модели полагается количество узлов одинаковым в каждом слое.

Общая структура системы по обнаружению дефектов выглядит так:

Для оценки качества предсказания используются 3 стандартные метрики задач классификации: precision, recall, F1. Для возможности воспроизведения и проверки результатов данные брались из открытого репозитория PROMISE.

Заключение

В качестве бейзлайнов использовалось 2 алгоритма:

- Алгоритм, использующий 20 заранее придуманных признаков, таких как: количество строк кода, количество операторов и операндов, количество методов в классе, позиция в классе дерева наследования, метрики сложности МакКэба и т.д..

- Алгоритм, использующий вершины дерева AST (после исправления шума). Код представляется, как вектор встречаемости термов в AST-узлах.

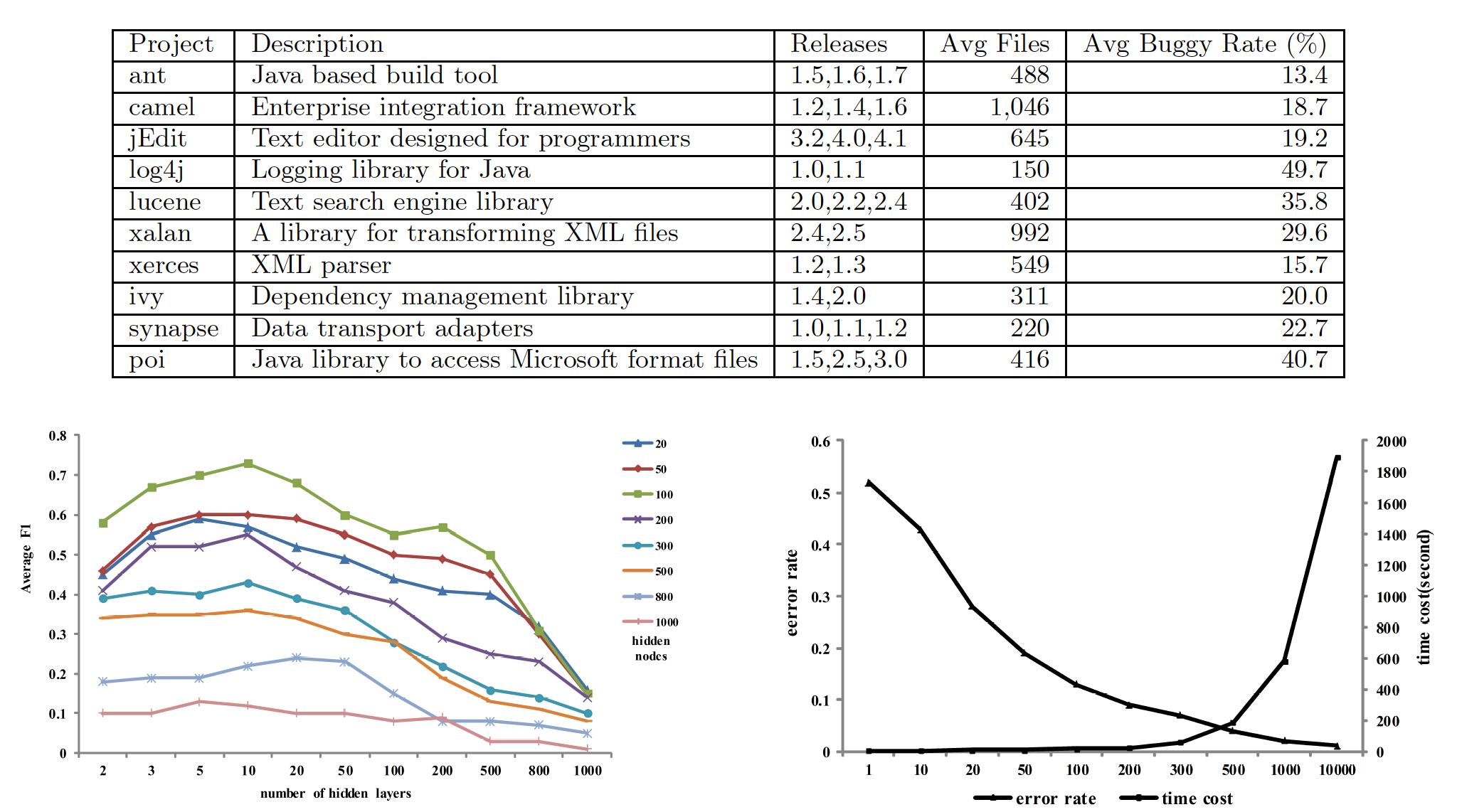

Ниже представлены датасеты, используемые для измерения качества, а также график зависимости качества от количества скрытых компонент и график зависиости величины ошибки от времени работы алгоритма.

В статье приводится большое число различных вариантов тестирования качества:

- при фиксированном проекте (within-project): обучение на части файлов, предсказание для других

- обучение на файлах одного проекта, предсказание для файлов другого (cross-project)

- использование различных алгоритмов для принятия решений на семантических признаках от DBN(ADTree, Naive Bayes, Logistic Regression)

В общем и целом, подход с DBN показывает более высокое качество более, чем на 90% проектах во всех способах, вот одна из таблиц сравнения результатов:

Ссылки

- S. Amasaki, Y. Takagi, O. Mizuno, and T. Kikuno. A Bayesian Belief Network for Assessing the Likelihood of Fault Content. In ISSRE’03, pages 215–226.

- Y. Bengio. Learning Deep Architectures for AI. Foundations and Trends in Machine Learning, 2(1):1–127, 2009.

- D. M. Blei, A. Y. Ng, and M. I. Jordan. Latent dirichlet allocation. the Journal of machine Learning research, 3:993–1022, 2003.

- T.-H. Chen, S. W. Thomas, M. Nagappan, and A. E. Hassan. Explaining software defects using topic models. In MSR’12, pages 189–198.

- S. R. Chidamber and C. F. Kemerer. A Metrics Suite for Object Oriented Design. TSE’94, 20(6):476–493.

- D. Ciresan, U. Meier, and J. Schmidhuber. Multi-column deep neural networks for image classification. In CVPR’12, pages 3642–3649.

- F. B. e Abreu and R. Carapuça. Candidate metrics for object-oriented software within a taxonomy framework. JSS’94, 26(1):87–96.

- K. O. Elish and M. O. Elish. Predicting defect-prone software modules using support vector machines. JSS’08, 81(5):649–660.

- N. Gayatri, S. Nickolas, A. Reddy, S. Reddy, and A. Nickolas. Feature selection using decision tree induction in class level metrics dataset for software defect predictions. In WCECS’10, pages 124–129.

- M. H. Halstead. Elements of Software Science (Operating and programming systems series). Elsevier Science Inc., 1977.

- R. Harrison, S. J. Counsell, and R. V. Nithi. An evaluation of the mood set of object-oriented software metrics. TSE’98, 24(6):491–496.

- A. E. Hassan. Predicting faults using the complexity of code changes. In ICSE’09, pages 78–88.

- Z. He, F. Peters, T. Menzies, and Y. Yang. Learning from open-source projects: An empirical study on defect prediction. In ESEM’13, pages 45–54.

- K. Herzig, S. Just, and A. Zeller. It’s not a bug, it’s a feature: how misclassification impacts bug prediction. In ICSE’13, pages 392–401.

- A. Hindle, E. T. Barr, Z. Su, M. Gabel, and P. Devanbu. On the naturalness of software. In ICSE’12, pages 837–847.

- G. E. Hinton, S. Osindero, and Y.-W. Teh. A fast learning algorithm for deep belief nets. Neural computation’06, 18(7):1527–1554.

- G. E. Hinton and R. R. Salakhutdinov. Reducing the dimensionality of data with neural networks. Science’06, 313(5786):504–507.

- T. Jiang, L. Tan, and S. Kim. Personalized defect prediction. In ASE’13, pages 279–289.

- X. Jing, F. Wu, X. Dong, F. Qi, and B. Xu. Heterogeneous cross-company defect prediction by unified metric representation and cca-based transfer learning. In FSE’15, pages 496–507.

- X.-Y. Jing, S. Ying, Z.-W. Zhang, S.-S. Wu, and J. Liu. Dictionary learning based software defect prediction. In ICSE’14, pages 414–423.

- T. Khoshgoftaar and N. Seliya. Tree-based software quality estimation models for fault prediction. In Software Metrics’02, pages 203–214.307

- S. Kim, H. Zhang, R. Wu, and L. Gong. Dealing with noise in defect prediction. In ICSE’11, pages 481–490.

- S. Kim, T. Zimmermann, E. J. Whitehead Jr, and A. Zeller. Predicting faults from cached history. In ICSE’07, pages 489–498.

- S. Kombrink, T. Mikolov, M. Karafiát, and L. Burget. Recurrent neural network based language modeling in meeting recognition. In INTERSPEECH’11, pages 2877–2880.

- A. Krizhevsky, I. Sutskever, and G. E. Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems’12, pages 1097–1105.

- A. Lam, A. Nguyen, H. Nguyen, and T. Nguyen. Combining deep learning with information retrieval to localize buggy files for bug reports. In ASE’15, pages 476–481.

- T. Lee, J. Nam, D. Han, S. Kim, and H. P. In. Micro interaction metrics for defect prediction. In FSE’11,pages 311–321.

- Z. Li and Y. Zhou. Pr-miner: automatically extracting implicit programming rules and detecting violations in large software code. In FSE’05, pages 306–315.

- Y. Liu, D. Poshyvanyk, R. Ferenc, T. Gyimóthy, and N. Chrisochoides. Modeling class cohesion as mixtures of latent topics. In ICSM’09, pages 233–242.

- C. D. Manning and H. Schütze. Foundations of statistical natural language processing. MIT press, 1999.

- T. J. McCabe. A complexity measure. TSE’76, (4):308–320.

- A. Meneely, L. Williams, W. Snipes, and J. Osborne. Predicting failures with developer networks and social network analysis. In FSE’08, pages 13–23.

- T. Menzies, J. Greenwald, and A. Frank. Data mining static code attributes to learn defect predictors. TSE’07, 33(1):2–13.

- T. Menzies, Z. Milton, B. Turhan, B. Cukic, Y. Jiang, and A. Bener. Defect prediction from static code features: current results, limitations, new approaches. ASE’10, 17(4):375–407.

- A. Mnih and G. E. Hinton. A scalable hierarchical distributed language model. In Advances in neural information processing systems’09, pages 1081–1088.

- A. Mohamed, D. Yu, and L. Deng. Investigation of full-sequence training of deep belief networks for speech recognition. In INTERSPEECH’10, pages 2846–2849.

- A.-r. Mohamed, G. E. Dahl, and G. Hinton. Acoustic modeling using deep belief networks. Audio, Speech, and Language Processing, IEEE Transactions on, 20(1):14–22, 2012.

- R. Moser, W. Pedrycz, and G. Succi. A comparative analysis of the efficiency of change metrics and static code attributes for defect prediction. In ICSE’08, pages 181–190.

- L. Mou, G. Li, Z. Jin, L. Zhang, and T. Wang. TBCNN: A tree-based convolutional neural network for programming language processing. arXiv preprint arXiv:1409.5718, 2014.

- N. Nagappan and T. Ball. Using software dependencies and churn metrics to predict field failures: An empirical case study. In ESEM’07, pages 364–373.

- J. Nam and S. Kim. Heterogeneous defect prediction. In FSE’15, pages 508–519.

- J. Nam, S. J. Pan, and S. Kim. Transfer defect learning. In ICSE’13, pages 382–391.

- G. Navarro. A guided tour to approximate string matching. ACM Comput. Surv., 33(1), 2001.

- A. T. Nguyen and T. N. Nguyen. Graph-based statistical language model for code. In ICSE’15, pages 858–868.

- A. T. Nguyen, T. T. Nguyen, T. N. Nguyen, D. Lo, and C. Sun. Duplicate bug report detection with a combination of information retrieval and topic modeling. In ASE’12, pages 70–79.

- T. T. Nguyen, H. A. Nguyen, N. H. Pham, J. M. Al-Kofahi, and T. N. Nguyen. Graph-based mining of multiple object usage patterns. In FSE’09, pages 383–392.

- T. T. Nguyen, T. N. Nguyen, and T. M. Phuong. Topic-based defect prediction. In ICSE’11, pages 932–935.

- T. J. Ostrand, E. J. Weyuker, and R. M. Bell. Programmer-based fault prediction. In PROMISE’10, pages 19:1–19:10.

- S. J. Pan, I. Tsang, J. Kwok, and Q. Yang. Domain adaptation via transfer component analysis. Neural Networks, IEEE Transactions on, pages 199–210, 2011.

- R. Pascanu, J. W. Stokes, H. Sanossian, M. Marinescu, and A. Thomas. Malware classification with recurrent networks. In ICASSP’15, pages 1916–1920.

- M. Pinzger, N. Nagappan, and B. Murphy. Can developer-module networks predict failures? In FSE’08, pages 2–12.

- F. Rahman and P. Devanbu. How, and why, process metrics are better. In ICSE’13, pages 432–441.

- V. Raychev, M. Vechev, and E. Yahav. Code completion with statistical language models. In PLDI’14, pages 419–428.

- R. Salakhutdinov and G. Hinton. Semantic hashing. RBM’07, 500(3):500.

- R. Sarikaya, G. E. Hinton, and A. Deoras. Application of deep belief networks for natural language understanding. Audio, Speech, and Language Processing, IEEE/ACM Transactions on, 22(4):778–784, 2014.

- A. Tamrawi, T. T. Nguyen, J. M. Al-Kofahi, and T. N. Nguyen. Fuzzy set and cache-based approach for bug triaging. In FSE’11, pages 365–375.

- M. Tan, L. Tan, S. Dara, and C. Mayeux. Online defect prediction for imbalanced data. In ICSE’15, pages 99–108.

- C. Tantithamthavorn, S. McIntosh, A. E. Hassan, A. Ihara, and K. ichi Matsumoto. The impact of mislabelling on the performance and interpretation of defect prediction models. In ICSE’15, pages 812–823.

- W. Tao and L. Wei-hua. Naive bayes software defect prediction model. In CiSE’10, pages 1–4.

- Z. Tu, Z. Su, and P. Devanbu. On the localness of software. In FSE’14, pages 269–280.

- B. Turhan, T. Menzies, A. B. Bener, and J. Di Stefano. On the relative value of cross-company and within-company data for defect prediction. Empirical Softw. Engg., 14(5):540–578, 2009.

- J. Wang, B. Shen, and Y. Chen. Compressed c4. 5 models for software defect prediction. In QSIC’12, pages 13–16.

- S. Watanabe, H. Kaiya, and K. Kaijiri. Adapting a fault prediction model to allow inter languagereuse. In Proceedings of the 4th International Workshop on Predictor Models in Software Engineering, pages 19–24, 2008.

- E. J. Weyuker, T. J. Ostrand, and R. M. Bell. Using developer information as a factor for fault prediction. In PROMISE’07, pages 8–8.

- M. White, C. Vendome, M. Linares-Vásquez, and D. Poshyvanyk. Toward deep learning software repositories. In MSR’15, pages 334–345.

- I. H. Witten and E. Frank. Data Mining: Practical machine learning tools and techniques. Morgan Kaufmann, 2005.

- X. Xie, W. Zhang, Y. Yang, and Q. Wang. Dretom: Developer recommendation based on topic models for bug resolution. In PROMISE’12, pages 19–28.

- X. Yang, D. Lo, X. xia, Y. Zhang, and J. Sun. Deep learning for just-in-time defect prediction. In QRS’15, pages 17–26.

- Z. Yuan, Y. Lu, Z. Wang, and Y. Xue. Droid-sec: Deep learning in android malware detection. In SIGCOMM’14, pages 371–372.

- T. Zimmermann, R. Premraj, and A. Zeller. Predicting defects for eclipse. In PROMISE’07, pages 9–9.