Reference architectures for high-load internet-scale systems

Introduction

High-load internet systems are software and hardware complexes designed to process large volumes of data and requests efficiently using a scalable, distributed architecture. They aim to deliver high performance, responsiveness, and reliability while handling real-time traffic generated by many users or devices. High-load systems often combine elements such as microservice architecture, horizontal scaling, load balancing, data caching, and backup and disaster recovery strategies to keep applications running continuously [1]. Together, these elements provide high availability and real-time request processing, allowing the system to keep working effectively during spikes in user activity or heavy traffic.

Components of high-load internet systems

Microservice architecture

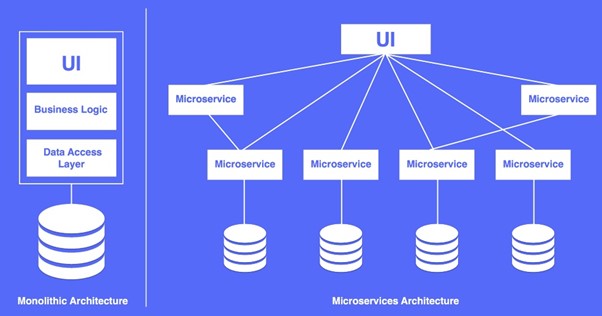

Microservice architecture is an approach to software development in which an application is divided into small, autonomous and interoperable services [2]. Each microservice is a separate logical application, usually developed independently of others. Microservices can use a variety of technologies, databases, and even programming languages within a single system.

In the context of high-load services, microservice architecture provides several advantages. First, it makes horizontal scaling easier: only the components that need additional resources are scaled, instead of the entire application. This improves resource utilization and allows a more flexible response to load changes. Second, microservices can improve fault tolerance: if one service stops working, the others can continue to function, which increases the stability of the system. Microservices also simplify changes and updates, since a change can be made to and deployed for one specific service without affecting the rest.
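To illustrate per-service scaling, the following minimal Python sketch computes how many replicas each service needs from its own load alone. The service names, request rates, and per-replica capacities are hypothetical, and real autoscalers use richer signals than a single request rate.

```python
import math


def replicas_needed(requests_per_second, capacity_per_replica, min_replicas=1):
    """Replicas a single service needs to absorb its own load (rounded up)."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    return max(min_replicas, math.ceil(requests_per_second / capacity_per_replica))


# Hypothetical load per service: (requests per second, capacity of one replica).
services = {
    "catalog": (1200, 300),
    "checkout": (90, 100),
    "search": (5000, 400),
}

plan = {name: replicas_needed(rps, cap) for name, (rps, cap) in services.items()}
print(plan)  # {'catalog': 4, 'checkout': 1, 'search': 13}
```

With these numbers only the search service runs many replicas; a monolith would have to replicate every component 13 times to meet the demand of its busiest part.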

The difference between a microservice architecture and a monolithic architecture is that in microservices, the functionality of an application is divided into many small services that can be deployed and scaled independently of each other. In a monolithic architecture, all application components are integrated into a single unit, which can make their development, support, and scaling more complex.

Load balancer

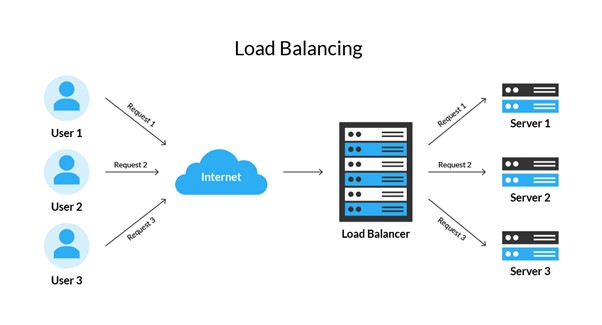

A load balancer is an infrastructure component designed to evenly distribute incoming traffic between multiple servers or resources. It acts as an intermediary between clients and servers, directing requests to one of the servers in the pool [3]. This allows you to achieve optimal utilization of resources, increase fault tolerance and ensure more efficient operation of the system in high-load conditions.

In high-load services, the load balancer plays a central role by distributing requests evenly between servers. This prevents individual nodes from being overloaded and enables horizontal scaling: new servers can be added to the pool to handle growing load.
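Round-robin is one of the simplest ways to spread requests evenly. The sketch below is a minimal, single-process illustration that assumes every backend has equal capacity; the backend names are hypothetical, and production balancers such as NGINX or HAProxy provide this strategy through their own configuration rather than application code.

```python
from collections import Counter


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order (not thread-safe)."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0  # position of the next backend in the rotation

    def add_backend(self, backend):
        # Horizontal scaling: a new server simply joins the rotation.
        self.backends.append(backend)

    def next_backend(self):
        if not self.backends:
            raise RuntimeError("no backends configured")
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        return backend


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
first_round = [balancer.next_backend() for _ in range(6)]
print(Counter(first_round))  # each backend receives 2 of the 6 requests

balancer.add_backend("app-4")
second_round = [balancer.next_backend() for _ in range(4)]
print(sorted(second_round))  # ['app-1', 'app-2', 'app-3', 'app-4']
```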

Fault tolerance is another important aspect. If one of the servers fails, the balancer, which typically detects the failure through periodic health checks, redirects traffic to the remaining healthy nodes, minimizing the impact of the failure on overall service availability.
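The failover idea can be sketched as a round-robin balancer that skips backends marked as unhealthy. In this minimal sketch, `mark_down` and `mark_up` are hypothetical hooks that a real balancer would drive from failed or recovered health checks; the backend names are illustrative.

```python
class HealthAwareBalancer:
    """Round-robin over backends, skipping those currently marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0

    def mark_down(self, backend):
        # Hypothetical hook: called after a backend fails its health checks.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        # Hypothetical hook: called once a backend passes its health checks again.
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Try each backend at most once per call, continuing the rotation.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next % len(self.backends)]
            self._next += 1
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


lb = HealthAwareBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")  # app-2 has failed its health checks
picks = [lb.next_backend() for _ in range(4)]
print(picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```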

However, load balancers also have certain disadvantages. First, a balancer can be a single point of failure (SPOF): if the balancer itself fails, all traffic can be disrupted. This is why the load-balancing layer itself needs high reliability and redundancy, for example several balancer instances that can take over from one another.

Second, setting up and maintaining balancers can be fairly complex. Configuring balancing parameters correctly, managing sessions, and handling different types of traffic requires specialized knowledge and effort.

Another aspect is the risk of performance problems: if the balancer does not process requests quickly enough, it can itself become a bottleneck and add latency to every client request.

Efficient traffic routing is also essential, especially in multi-cluster and distributed environments. An incorrect routing strategy can result in uneven load distribution and performance degradation.
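As an example of why the routing strategy matters: when requests take very different amounts of time, plain round-robin can keep sending new work to a server that is still busy with long requests. A least-connections strategy, sketched minimally below, sends each request to the backend with the fewest requests in flight. The class and backend names are hypothetical, and the sketch ignores concurrency and backend weights.

```python
class LeastConnectionsBalancer:
    """Routes each new request to the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        # min() returns the first backend with the lowest count, so ties go to
        # whichever backend was listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when the request on this backend finishes.
        self.active[backend] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])
long_request = lb.acquire()          # a slow request occupies app-1

short_requests = []
for _ in range(4):                   # quick requests arrive while it is running
    backend = lb.acquire()
    short_requests.append(backend)
    lb.release(backend)

print(long_request, short_requests)  # app-1 ['app-2', 'app-2', 'app-2', 'app-2']
```

Round-robin would have sent two of those four short requests to the busy app-1.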

Message broker

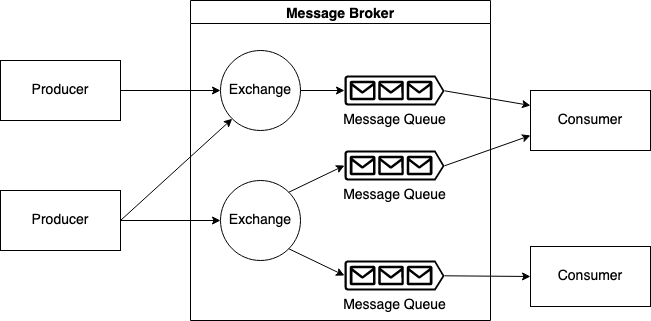

Message brokers are software components that organize and manage the exchange of messages between different applications or services. Their goal is to provide asynchronous communication between system components, which resolves problems caused by components that process data at different rates or are not always available [4].

Under high load, message brokers become a key element because they control the flow of data, support scalability, and improve system resilience. They decouple data producers from consumers, reducing the dependency between them and removing the need for both sides to be available and working at the same pace at the same time.
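The decoupling can be illustrated with a minimal in-process sketch in which Python's standard `queue.Queue` stands in for a real broker such as RabbitMQ or Kafka. This substitution is for illustration only: a real broker runs as a separate networked service with persistence and delivery guarantees that this sketch lacks. The message format and the `STOP` sentinel are hypothetical.

```python
import queue
import threading

# A bounded in-process queue stands in for the broker.
broker = queue.Queue(maxsize=100)
processed = []
STOP = object()  # sentinel telling the consumer to exit


def producer(count):
    for i in range(count):
        # put() blocks when the queue is full, which applies back-pressure.
        broker.put({"order_id": i})
    broker.put(STOP)


def consumer():
    while True:
        message = broker.get()  # waits until a message is available
        if message is STOP:
            break
        processed.append(message["order_id"])


# The producer never calls the consumer directly; both only know the queue.
consumer_thread = threading.Thread(target=consumer)
consumer_thread.start()
producer(10)
consumer_thread.join()
print(processed)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```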

The advantages of using message brokers include improved fault tolerance, easier addition of new services, and the ability to distribute data among multiple consumers. However, a broker is additional infrastructure that must be operated and maintained, and it requires careful design so that it does not itself become a performance bottleneck.

Examples of message brokers are Apache Kafka, RabbitMQ, Apache ActiveMQ, and Amazon Simple Queue Service (SQS). These tools provide a variety of capabilities for efficient and reliable messaging in high-load systems.

Caching

Caching is a performance optimization technique used in high-load services to temporarily store previously computed or retrieved data so that later access to it is faster [5]. It reduces the load on resources such as databases or web servers by serving frequently requested information quickly.

The advantages of caching in high-load systems are significant. Response times improve because the data is already in fast memory, so the system avoids repeating expensive operations to retrieve it from the original source. This is especially useful for repeated requests and results in lower latency for the end user.

Caching also has disadvantages. First, keeping the cache synchronized with the main data source can be a problem, since changes made in the main database are not necessarily reflected in the cache right away. This can lead to data inconsistency unless mechanisms for updating or invalidating cached entries are in place.
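Two common ways to limit stale data are giving each cache entry a time-to-live (TTL) and invalidating the cached entry whenever the underlying data is written. The minimal sketch below combines both; the `database` dictionary is a hypothetical stand-in for the main data source, and the injectable `clock` parameter exists only so that expiry can be tested deterministically.

```python
import time


class TTLCache:
    """Dictionary-backed cache whose entries expire after ttl_seconds.

    None is treated as a miss, so None values are never cached.
    """

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock    # injectable so that expiry can be tested deterministically
        self._store = {}      # key -> (value, expires_at)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: drop the stale entry and report a miss
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)

    def invalidate(self, key):
        self._store.pop(key, None)


database = {"user:1": "Alice"}     # hypothetical main data source
cache = TTLCache(ttl_seconds=60)


def read(key):
    value = cache.get(key)
    if value is None:              # miss or expired: reload from the source
        value = database[key]
        cache.set(key, value)
    return value


def write(key, value):
    database[key] = value
    cache.invalidate(key)          # otherwise readers could see the old value for up to 60 s


read("user:1")                     # populates the cache with "Alice"
write("user:1", "Alicia")
print(read("user:1"))              # Alicia
```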

Another disadvantage is the additional memory needed to store the cache, which can be a problem when system resources are limited.
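One standard way to cap the memory a cache uses is to bound the number of entries and evict the least recently used (LRU) entry when the limit is reached. The following minimal sketch builds an LRU cache on `collections.OrderedDict`; the capacity of 2 is chosen only to make the eviction visible, and the sketch bounds the entry count rather than the number of bytes.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps at most `capacity` entries, evicting the least recently used one."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry

    def __contains__(self, key):
        return key in self._data  # membership check does not change recency

    def __len__(self):
        return len(self._data)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")        # "a" becomes the most recently used entry
cache.put("c", 3)     # exceeds capacity, so "b" is evicted
print("b" in cache)   # False
```

For memoizing function results within a single process, the standard library's `functools.lru_cache(maxsize=...)` decorator applies the same eviction policy.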

In addition, a poorly configured cache, or caching data that is rarely reused or changes frequently, can degrade performance and cause consistency problems.

Nevertheless, with proper design and configuration, caching becomes an effective tool for optimizing high-load systems, balancing access speed against data consistency.

Monitoring and analytics

Monitoring and analytics are an integral part of managing high-load services effectively. Monitoring is the systematic observation and control of the service's components, which makes it possible to identify problems quickly and prevent failures [6]. Analytics, in the context of high-load systems, is the analysis of the data collected during monitoring in order to identify trends, optimize resources, and make informed decisions. Problems that go unnoticed, or that cannot be identified and eliminated, can significantly reduce performance, generate negative user feedback, and ultimately cost the service its audience.
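As a small example of turning raw monitoring data into something actionable, the sketch below summarizes one interval of request samples into a request count, a 95th-percentile latency (nearest-rank method), and an error rate. The samples and the choice of status codes 500 and above as errors are hypothetical; tools such as Prometheus typically compute such figures from counters and histograms rather than from raw samples.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests):
    """requests: list of (latency_ms, status_code) tuples from one interval."""
    latencies = [latency for latency, _ in requests]
    errors = sum(1 for _, status in requests if status >= 500)
    return {
        "count": len(requests),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
    }


# Hypothetical samples collected during one monitoring interval.
samples = [(12, 200), (15, 200), (11, 200), (250, 500), (14, 200),
           (13, 200), (18, 200), (16, 200), (900, 503), (17, 200)]
print(summarize(samples))  # {'count': 10, 'p95_ms': 900, 'error_rate': 0.2}
```

The median of these samples is only 15 ms, so a tail percentile reveals the slow requests that an average or median would hide.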

The advantages of implementing monitoring and analytics include a prompt response to incidents, valuable information for optimizing resources, and the ability to prevent future problems. Analytics also helps teams make informed decisions about scaling, performance improvement, and overall infrastructure optimization.

Despite these clear advantages, implementing monitoring and analytics also has its difficulties. It often requires additional resources, both in technical infrastructure and in personnel. Information overload and false positives can also be misleading and distract from real problems.
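One common way to reduce false positives is to alert only when a metric stays above its threshold for several consecutive checks, rather than on a single spike. Prometheus alerting rules express a similar idea with their `for` clause; the minimal Python sketch below shows the logic with a hypothetical threshold and error-rate series.

```python
class AlertRule:
    """Fires only after `required_breaches` consecutive values exceed `threshold`."""

    def __init__(self, threshold, required_breaches):
        if required_breaches < 1:
            raise ValueError("required_breaches must be at least 1")
        self.threshold = threshold
        self.required_breaches = required_breaches
        self._streak = 0  # consecutive observations above the threshold

    def observe(self, value):
        """Record one measurement and return True if the alert should fire."""
        self._streak = self._streak + 1 if value > self.threshold else 0
        return self._streak >= self.required_breaches


# Hypothetical per-minute error rates with a 5% threshold.
rule = AlertRule(threshold=0.05, required_breaches=3)
error_rates = [0.01, 0.09, 0.01, 0.06, 0.07, 0.08]
print([rule.observe(rate) for rate in error_rates])
# [False, False, False, False, False, True]
```

The isolated spike to 0.09 does not fire the alert; only the sustained rise over the last three minutes does.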

Examples of monitoring and analytics tools for high-load services include Prometheus, Grafana, the ELK stack (Elasticsearch, Logstash, Kibana), New Relic, Datadog, and many others. These tools provide performance monitoring, log collection and analysis, request tracing, and other functions that help keep high-load services stable and efficient.

Conclusion

The architecture of a high-load internet system is a complex mechanism capable of efficiently processing vast amounts of data and requests while providing high speed and reliability for many concurrent users. It includes various approaches and components, such as microservice architecture, load balancers, caching, message brokers, and systems for monitoring and visualizing system metrics. This list covers only some of the components and approaches used to build high-load internet systems. Each of them has its own advantages and disadvantages, so using them effectively requires careful configuration, monitoring, and analysis. Consequently, there is no universal architecture suitable for all high-load internet systems. Stable operation of such a system requires a balance between data processing speed, fault tolerance, and monitoring that is effective enough to respond promptly to emerging problems. Therefore, each system needs an individual analysis and trade-offs that meet its specific requirements.

References

1. Botashev, A. (2020). How to design a highload app. [online] Medium. Available at: https://medium.com/@alb.botashev/how-to-design-a-highload-app-2248b9022d3e [Accessed 22 Dec. 2023].

2. Ozkaya, M. (2023). Microservices Architecture. [online] Design Microservices Architecture with Patterns & Principles. Available at: https://medium.com/design-microservices-architecture-with-patterns/microservices-architecture-2bec9da7d42a [Accessed 22 Dec. 2023].

3. Wikipedia Contributors (2019). Load balancing (computing). [online] Wikipedia. Available at: https://en.wikipedia.org/wiki/Load_balancing_(computing) [Accessed 22 Dec. 2023].

4. Pubudu, N. (2021). Introduction to Message Brokers. [online] Nerd For Tech. Available at: https://medium.com/nerd-for-tech/introduction-to-message-brokers-bb5cacf9c70b [Accessed 22 Dec. 2023].

5. DEV Community (2023). Implementing a distributed cache for high-performance web applications. [online] Available at: https://dev.to/musembi/implementing-a-distribution-cache-for-high-performance-web-applications-36hh [Accessed 22 Dec. 2023].

6. Weichbrodt, L. (2022). A Gentle Introduction to Backend Monitoring. [online] Medium. Available at: https://lina-weichbrodt.medium.com/a-hands-on-introduction-to-backend-monitoring-a40dd36bac01 [Accessed 22 Dec. 2023].